Blender Beginner -- Help needed with Expressions and Auto Lip Sync

wsterdan

Posts: 3,135

I'm just starting to dip my toes in the Blender water and am hoping to get a little help, even if it's only to tell me where to read in the manual.

Disclaimer: I love DAZ Studio and I'm happy with the limited animation it does, but without a 64-bit lip sync option for Mac I'm starting to move over to Blender. I hate having a 32-bit iMac standing by just to do the lip sync using the 32-bit version of Mimic built into DAZ Studio. If they'd license the 64-bit version, I wouldn't be looking at Blender at all. It's frustrating that I can open up DAZ Studio 32-bit, do my lip syncing, save the file, open the 64-bit version on the same 2012 iMac, and both hear the audio and generate movie files with sound in a single render... but I can't do any of that on a newer machine.

I've installed Blender 4.3.2 for Mac and so far I'm trying to limit the Blender add-ons I install while I work my way through building a workflow.

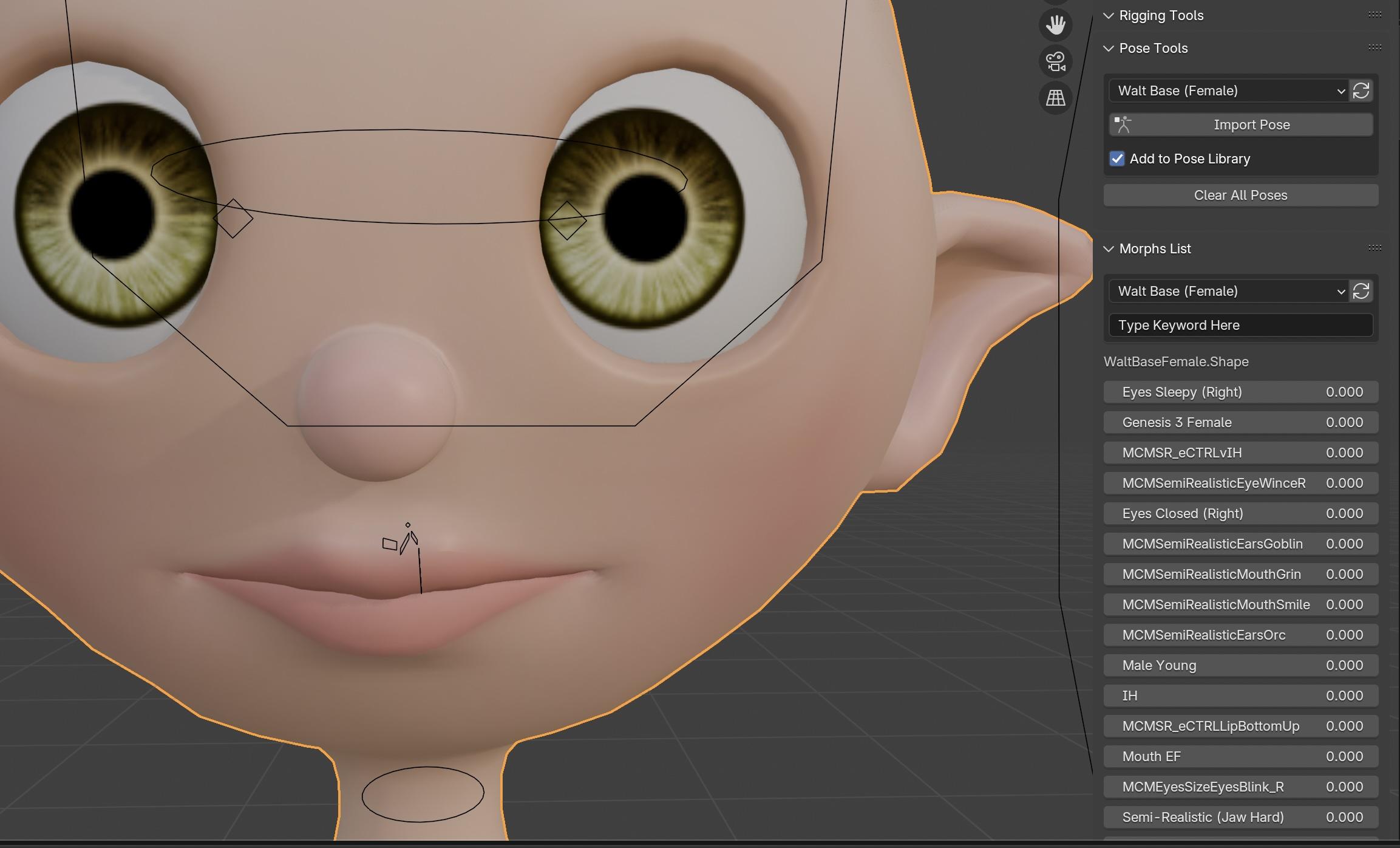

To this end, I've taken a custom character and, using DAZ's Send to Blender option, moved the character into Blender with only minor issues.

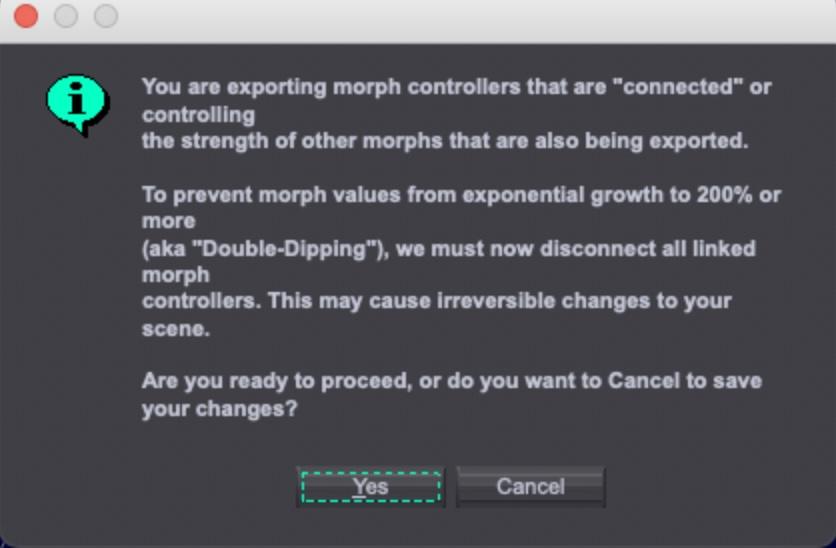

The main issue is the apparent inability to automatically move Expressions or combined morphs (morph "controllers" that are "connected") into Blender when transferring the character. For example, the blink morphs for the right eye and for the left eye come through and work perfectly, but a combined "blink eyes" morph doesn't. Blender, apparently, is worried that it might be applied at the same time as one of the individual morphs. Is there any easy way to override this when using the "Send to Blender" option, or to *easily* add them back in once the character has been moved?
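One way to *easily* add a combined morph back is to drive the two per-eye shape keys from a single controller. The sketch below only models the arithmetic such a driver would perform; it assumes (not confirmed in this thread) that the combined morph simply adds its value to each eye's own value, clamped to the 0..1 slider range. The shape-key names `Blink_L` and `Blink_R` are hypothetical.

```python
# Sketch: how a combined "blink eyes" controller could drive the two
# per-eye blink shape keys. ASSUMPTION: the controller's value is added to
# each eye's own value and the result is clamped to 0..1.

def clamp01(x: float) -> float:
    """Clamp a value into the 0..1 range used by shape-key sliders."""
    return max(0.0, min(1.0, x))


def combined_blink(left: float, right: float, blink_both: float) -> dict:
    """Return the effective per-eye blink values.

    left, right -- values of the individual per-eye shape keys
    blink_both  -- value of the combined controller
    """
    return {
        "Blink_L": clamp01(left + blink_both),  # hypothetical key name
        "Blink_R": clamp01(right + blink_both),  # hypothetical key name
    }


if __name__ == "__main__":
    # Controller at full strength closes both eyes whatever the sides say.
    print(combined_blink(0.0, 0.3, 1.0))
    # Controller off: each eye follows its own slider.
    print(combined_blink(0.5, 0.0, 0.0))
```

In Blender itself, the same `min(max(...))` arithmetic could be entered as a scripted-expression driver on each eye's shape key, with the controller exposed as a custom property; that is a general Blender technique, not something the DAZ bridge is known to set up for you.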

Second, with the mouth morphs transferred over to Blender, what would be the easiest way to do automatic lip sync? Ideally, I'd love the same easy method that Mimic uses inside DAZ Studio 32-bit, but I recognize that there's probably no Blender equivalent. I'm not interested in any camera-tracking option, just the quickest, easiest way to mimic (pun unintentional) the way I do it in DAZ Studio.

I'd like to get a simple workflow set up where I can work on some simple animations while learning Blender and its animation capabilities in more depth.

Finally, is this workable using the morphs that I'm transferring over, or am I absolutely looking at having to re-rig, add armatures, etc.?

My animations tend to be more dialogue-driven than high-end action sequences, and I'm open to any advice that will help me get there as simply and smoothly as possible. I don't mind investing in plug-ins and add-ons that will help, but I don't want to install things that I won't use. I'm all about the K.I.S.S. methodology, where I am most definitely the second "S".

Thanks.

Comments

For clarification, are you saying that DAZ Studio on M-series Macs will not read a .duf facial animation file from 32-bit DAZ Studio?

I can't test it, as my only Apple device is a 2017 iMac, and 32-bit .duf files move over fine from my PC.

No, I'm trying to stay away from 32-bit DAZ Studio completely. I'm trying to replace the workflow of using the 32-bit lip sync in DAZ Studio and instead do it all in Blender, by moving my DAZ characters (DAZ store characters and my own custom models) to Blender and doing the lip sync and animation there.

Ah, I think I see what you were asking; sorry, I misunderstood your question. When I did my 10 minutes of sci-fi animation, I used that method: I used a 32-bit version of DAZ Studio to lip sync all of my audio files (about 70 of them) and saved out partial poses of just the heads and necks, then applied them to my character models on my old M1 iMac to speed up rendering (though I started out doing it all on an old Intel MacBook Air, strictly as a proof-of-concept).

I had no issues at all applying .duf facial animation poses from 32-bit DAZ Studio, other than the pain-in-the-rear of keeping a 32-bit Mac *just* to do the lip sync and then moving the work to a newer 64-bit machine to render. On the newer machine I couldn't insert an audio file to help animate the parts of the scene that weren't keyed by the Mimic-based pose file, nor could I output a movie file with the sound included. Instead, I had to output a series of frames, combine the frames into a movie file and *then* add the audio track to it.
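The frames-then-audio step can at least be collapsed into a single command with ffmpeg, a free command-line tool not mentioned in this thread (assumed here to be installed and on the PATH). The sketch below just builds the argument list; the frame-name pattern and file names are hypothetical placeholders.

```python
import shlex
import subprocess


def build_ffmpeg_cmd(frame_pattern, audio_path, out_path, fps=30):
    """Build an ffmpeg command that joins an image sequence and an audio track."""
    return [
        "ffmpeg",
        "-y",                     # overwrite the output file if it exists
        "-framerate", str(fps),   # frame rate of the image sequence
        "-i", frame_pattern,      # e.g. "render/frame_%04d.png" (hypothetical)
        "-i", audio_path,         # the dialogue track
        "-c:v", "libx264",        # H.264 video
        "-pix_fmt", "yuv420p",    # pixel format most players accept
        "-c:a", "aac",            # AAC audio
        "-shortest",              # end when the shorter input ends
        out_path,
    ]


def make_movie(frame_pattern, audio_path, out_path, fps=30):
    """Run ffmpeg (installed separately); raises CalledProcessError on failure."""
    subprocess.run(build_ffmpeg_cmd(frame_pattern, audio_path, out_path, fps),
                   check=True)


if __name__ == "__main__":
    # Print the command instead of running it.
    print(shlex.join(build_ffmpeg_cmd("render/frame_%04d.png", "dialogue.wav",
                                      "scene.mp4", fps=24)))
```

`-shortest` is a judgment call: it trims whichever input runs longer, so drop it if you would rather keep trailing audio.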

In 2012*, I could open a file with a character in it, apply an audio file to the character and lip sync it, add any other tweaks or animations while listening to the sound track to time it and then output a single movie file with audio and video.

Now, I can't do *any* of that, but thanks to modern hardware I can do none of it many times faster.

* I should clarify that I can still do that, if I want to use my old iMac. Before packing it up for storage, I ran some tests to see how far I could push it. I was able to use the 32-bit lip sync and render out about 15 seconds of movie in the 32-bit mode; anything longer just stopped rendering the video and repeated the last frame until the end of the audio (even though it played normally in preview). I then saved the DAZ Studio file, opened it in the 64-bit version of DAZ Studio, and was able to output a 29-second movie file, video and audio, directly from DAZ Studio (777 megabytes in size). I *wish* I could do that on my new machine with the latest versions of DAZ Studio.

I see

Sadly, my friend, I have no knowledge of the Daz to Blender addon, as I use the (Windows-only) Diffeo addon, which supports multiple facial animation options in Blender.

Regarding native Blender options:

First: as you know, none will be as simple as importing an audio file and having the character speak, as with DAZ Mimic.

Second: any native Blender option will require rigging the face with specific blend shapes.

The most powerful option on the market today in that regard (which I also have) is the Faceit addon from Blender Market, but its initial setup is a bit complex.

https://blendermarket.com/products/faceit

Thanks for the info. I'm hoping to avoid face tracking if I can help it, but I'll look into this as an alternative; it looks very powerful, with a mass of realistic options.

Is the Windows Diffeomorphic itself different from the Mac version, or are you using a Windows-only add-on to Diffeo?

Thanks again.

Diffeomorphic is a Python script, so it runs on Windows as well as on Mac/Linux. In the global settings there's an option for case-sensitive paths. Apart from FACS, you can also use Papagayo for lip sync with audio files (runtime > facial animation > import moho); that's more similar to what you're asking for. For the full features you need all three modules: import + mhx + bvh.
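For the curious, the file Papagayo exports for this is, as far as I know, a plain-text "switch" file: a `MohoSwitch1` header followed by one `frame phoneme` pair per line, with each phoneme held until the next key. Diffeo's import moho reads it for you; this sketch, which assumes exactly that layout, only shows what is inside and how to look up the mouth shape active on a given frame. The sample data is made up.

```python
import bisect


def parse_moho_switch(text):
    """Parse a MOHO switch export into a sorted list of (frame, phoneme)."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines or lines[0] != "MohoSwitch1":
        raise ValueError("expected a 'MohoSwitch1' header")
    keys = []
    for ln in lines[1:]:
        frame_str, phoneme = ln.split(maxsplit=1)
        keys.append((int(frame_str), phoneme))
    keys.sort(key=lambda k: k[0])
    return keys


def phoneme_at(keys, frame, default="rest"):
    """Return the phoneme held at `frame` (each key holds until the next one)."""
    frames = [f for f, _ in keys]
    i = bisect.bisect_right(frames, frame) - 1
    return keys[i][1] if i >= 0 else default


if __name__ == "__main__":
    sample = "MohoSwitch1\n1 rest\n5 E\n9 MBP\n14 rest\n"  # made-up data
    keys = parse_moho_switch(sample)
    print(keys)
    print(phoneme_at(keys, 7))
```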

https://bitbucket.org/Diffeomorphic/workspace/projects/DIF

https://bitbucket.org/Diffeomorphic/import_daz/wiki/Home

OK, good to know. I had mistakenly assumed that Diffeo was Windows-only, based on another Linux & Mac user's posts here detailing his various challenges.

Indeed, if @wsterdan can import Genesis figures via Diffeo on his Apple silicon Mac, then all he needs is the 64-bit version of Papagayo, and he can use his audio files to create the Moho .dat files to lip sync his characters in Blender.
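One thing to watch: the frame numbers in the .dat file are tied to the frame rate set in the Papagayo project. If that differs from the Blender scene's frame rate, the keys would need rescaling; whether Diffeo's importer already does this is not something this thread confirms. A minimal sketch, assuming frame 0 corresponds to time 0 at both rates:

```python
from fractions import Fraction


def rescale_keys(keys, src_fps, dst_fps):
    """Move (frame, phoneme) keys from src_fps to dst_fps.

    ASSUMPTION: frame 0 is time 0 at both rates. If two keys land on the
    same target frame, the later one wins.
    """
    ratio = Fraction(dst_fps) / Fraction(src_fps)  # exact, avoids float drift
    out = []
    for frame, phoneme in keys:
        new_frame = round(frame * ratio)  # Fraction rounds half to even
        if out and out[-1][0] == new_frame:
            out[-1] = (new_frame, phoneme)
        else:
            out.append((new_frame, phoneme))
    return out


if __name__ == "__main__":
    # A 24 fps Papagayo project going into a 30 fps Blender scene.
    print(rescale_keys([(1, "rest"), (5, "E"), (9, "MBP")], 24, 30))
    # -> [(1, 'rest'), (6, 'E'), (11, 'MBP')]
```

For NTSC-style rates, pass an exact value such as `Fraction(30000, 1001)` rather than `29.97`.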

https://www.lostmarble.com/papagayo/

Thanks for the suggestions, I'll look into them later today. Much appreciated.

Just chiming in to endorse the FaceIt addon as well. It's amazing. The quality is already decent out of the box, and improves in proportion to the time you spend tweaking (its workflow is nondestructive). Then you can load FACS data from facial capture apps or NVIDIA Omniverse's Audio2Face. That's kinda close to the DS lip sync app you guys refer to, isn't it?

Thanks for the additional endorsement, FaceIt is on my list of things to investigate.

The Mimic-based DS lip sync, for me, is a little different in that it does a very good job for what I want to do, and it does it without requiring camera input or face tracking. I've only done a very little face tracking, and I find it too tedious to "perform" when I've got multiple voices, or when a 10-minute animation has nine minutes of dialogue. Part of that is me being lazy, but I do recognize that face tracking is capable of better animation quality than I get with DS Mimic; I'm willing to accept a decrease in quality in favour of ease of use and time savings.

The DS lip sync involves loading a character into a scene, loading an audio file and a text file with the dialogue, and hitting "analyze" (where it asks you to load in a .DMC file for the character, a generic text file provided with most Genesis models that describes the morphs and visemes). It then does (as far as I need) a very good job of doing the lip syncing while also adding eye blinks and slight head movement to make the character seem more "alive".
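Conceptually, the .DMC step maps each phoneme the analysis finds onto a blend of the character's viseme morphs. The sketch below illustrates that idea with Papagayo's default phoneme set (AI, E, O, U, FV, L, MBP, WQ, etc, rest). The weight table and shape-key names are invented for illustration; they are neither the DMC file format nor Diffeo's actual mapping. Crossfading between neighbouring phonemes is one simple way to soften hard switches.

```python
# HYPOTHETICAL table: Papagayo phoneme -> weights on made-up shape keys.
# A real character would use its own viseme morph names and values.
VISEMES = {
    "AI": {"MouthOpen": 0.8, "Wide": 0.3},
    "E": {"MouthOpen": 0.4, "Wide": 0.6},
    "O": {"MouthOpen": 0.6, "Round": 0.7},
    "U": {"MouthOpen": 0.2, "Round": 0.9},
    "FV": {"LipBite": 1.0},
    "L": {"MouthOpen": 0.4, "Tongue": 0.6},
    "MBP": {"LipsClosed": 1.0},
    "WQ": {"Round": 1.0},
    "etc": {"MouthOpen": 0.3},
    "rest": {},
}


def weights_for(phoneme):
    """Shape-key weights for one phoneme; unknown phonemes map to rest."""
    return VISEMES.get(phoneme, {})


def blended_weights(prev, cur, t):
    """Linear crossfade from prev's weights (t=0) to cur's weights (t=1)."""
    a, b = weights_for(prev), weights_for(cur)
    names = sorted(set(a) | set(b))
    return {n: (1 - t) * a.get(n, 0.0) + t * b.get(n, 0.0) for n in names}


if __name__ == "__main__":
    # Halfway from a neutral mouth into a closed "M/B/P" shape.
    print(blended_weights("rest", "MBP", 0.5))
```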

As mentioned, before I packed up my 2012 iMac I did a few tests; here's one where I loaded one of my Genesis 3 characters into a scene and used lip sync to generate the animation. I saved the scene file from the 32-bit DAZ Studio, then opened it in the 64-bit DAZ Studio on the same 2012 iMac and rendered a .mov file that contained both the audio and the video (32-bit DAZ Studio would only output a .mov file with the first two seconds of video alongside the full 6 seconds of audio). I converted the .mov file to .mp4 to reduce its file size and make it easier to view.

https://sterdan.com/wp-content/uploads/2025/03/Professor-1080p-OGL-Blocks-n-Poses.mp4

To keep things in perspective, it took less than a minute to set up all the animation. Rendering, of course, took much longer (2012 render speeds).

As mentioned, if the current DAZ Studio 64-bit software supported their Mimic-based lip sync, allowed me to insert my audio files to help tweak the animation, and could output a finished movie file like the one above, I'd be more than happy to pay well for it and just keep on using DAZ Studio. Using the 2012 iMac, I was able to output a single monologue like this that was 29 seconds long and 777 megabytes in size (and, again, took less than a minute to set up). I miss that simple ability.

Since that's not going to happen, I'm trying to get as close to this ease of use as I can with Blender.

Just saw your edit, fantastic! Thanks for the update, it looks like the way I'll probably be going.

Thanks again for the help, everybody. I downloaded everything, tried out Papagayo, and then set up and rendered a simple animation in Blender. It worked as promised, and I was able to get a quick render out of it. I set up a scene similar to the one I did on the old iMac, but it'll take a while before I learn enough about Blender to get decent results. That's on me, no stain on Blender.

https://sterdan.com/wp-content/uploads/2025/03/Sira-0-150-Cycles.mp4

I also have to work on importing into Blender; I couldn't get my custom characters (as in the first post) into Blender so that the mouth morphs worked as shape keys. Lots to learn.

I'll have to find or write some small scripts to add random eye blinks and minor head tweaks similar to the ones that Mimic inserts, but thanks to your help the first hurdle has been cleared.
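As a starting point for the blink script, here is a minimal sketch that schedules random blinks as (frame, value) keys for a single blink shape key. The gap range, close/open durations and the shape-key name in the commented Blender snippet are assumptions, not Mimic's actual timings.

```python
import random


def blink_keys(start, end, fps=24, min_gap_s=2.0, max_gap_s=6.0,
               close_frames=2, open_frames=3, seed=None):
    """Return (frame, value) keyframes for a blink shape key.

    value 0.0 = eyes open, 1.0 = eyes closed. min_gap_s should be > 0
    so that keyframes never share a frame.
    """
    rng = random.Random(seed)  # seed it for repeatable results

    def gap():
        # Random pause between blinks, converted from seconds to frames.
        return round(rng.uniform(min_gap_s, max_gap_s) * fps)

    keys = [(start, 0.0)]  # eyes open at the start of the range
    frame = start + gap()
    while frame + close_frames + open_frames <= end:
        keys.append((frame, 0.0))                               # blink starts
        keys.append((frame + close_frames, 1.0))                # fully closed
        keys.append((frame + close_frames + open_frames, 0.0))  # open again
        frame += close_frames + open_frames + gap()
    return keys


if __name__ == "__main__":
    for frame, value in blink_keys(1, 240, seed=1):
        print(frame, value)

# Inside Blender (untested sketch; "Blink" is a hypothetical shape-key name):
# import bpy
# obj = bpy.context.active_object
# kb = obj.data.shape_keys.key_blocks["Blink"]
# for frame, value in blink_keys(1, bpy.context.scene.frame_end, seed=1):
#     kb.value = value
#     kb.keyframe_insert("value", frame=frame)
```

For the slight head movement, Blender's built-in Noise F-Curve modifier on a head or neck bone's rotation channels may be enough without any script.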

Custom morphs can be imported either as daz favorites or custom morphs.

https://bitbucket.org/Diffeomorphic/import_daz/wiki/Setup/Morphs

@wsterdan

Strong start for a person new to Blender

Congrats!!

FYI, you can always import head and body motions from DAZ Studio (.duf) onto your Diffeo-imported characters via the "import action" panel.

I did a video on the many lip sync options a few years ago; the Diffeo/MOHO.dat option is near the end. Hope this helps.

Thanks, Padone and wolf359, for the additional follow-up. As a very first render/animation in a program I've never used, I thought it came out "okay", more of a proof-of-concept than something I'd use to show off, but it didn't take a lot of work thanks to your help, Papagayo and Diffeo. I actually spent more time figuring out where all of the render output settings were and moving the light and camera around than I did importing the character and set and creating and applying the Papagayo .dat file.

Now it's time to start looking at tutorials rather than my normal, very random trial-and-error methods.

Thanks again.

Although @wsterdan asked this question, I'm following it with great interest and appreciation!

tnx all,

--ms